Getting visitors to your website is one milestone, but turning them into customers, subscribers, or leads is where real success lies. Conversion optimization is not guesswork; it requires a systematic approach backed by data. Businesses often face the same problem: plenty of visitors come to their site, but too few take the desired action. The question is: why?

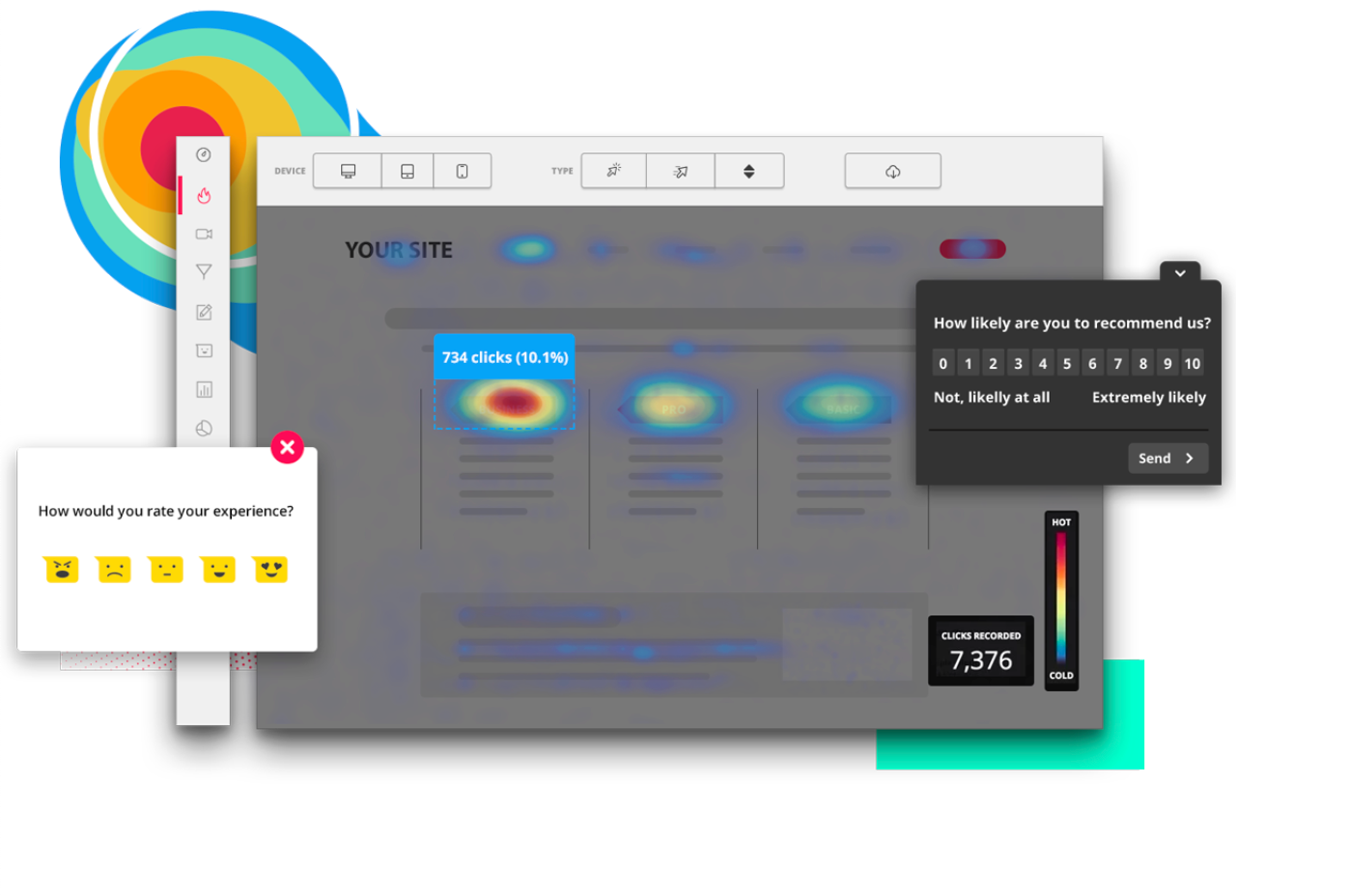

This is where heatmaps and A/B testing come into play. Both are tools designed to improve conversion rates, but they work in different, complementary ways. Heatmaps provide a visual representation of user behavior, showing you what people do on a page: where they click, how far they scroll, and where they lose interest. A/B testing, on the other hand, goes one step further by running different versions of a page or element against each other to determine which performs better.

Comparing these two tools helps marketers, designers, and business owners decide when to use one, when to use the other, and most importantly, how to combine them for maximum impact. In this article, we’ll dive deep into their differences, strengths, weaknesses, and use cases, and explore how together they create a powerful cycle of continuous optimization.

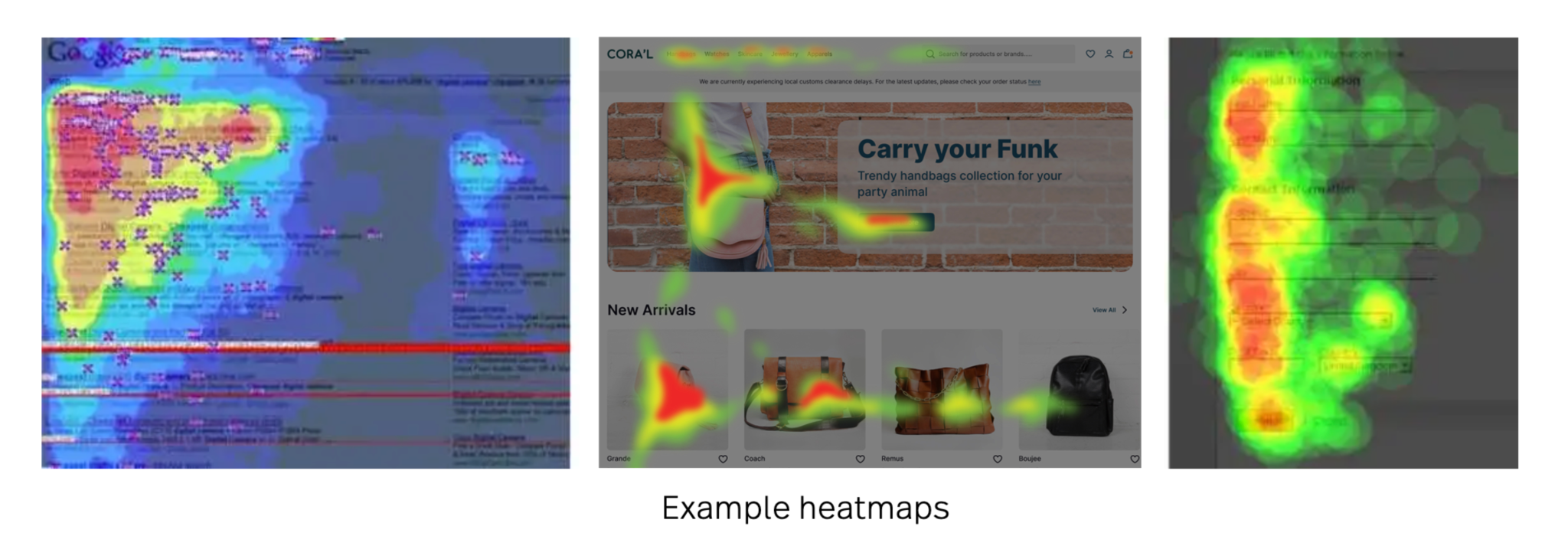

A heatmap is essentially a diagnostic tool. It doesn’t change anything on your website but instead collects data about how visitors interact with your pages. The output is a color-coded visual that highlights activity, with “hot” areas (red, orange, yellow) showing the most engagement and “cold” areas (blue, green) showing the least.

Heatmaps provide quick, visual insights that even non-technical team members can understand. Instead of looking at numbers in a spreadsheet, you can literally “see” where users are focusing their attention. For instance, if a key button is cold blue, that’s an immediate signal that it isn’t attracting clicks.
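To make the idea concrete, here is a minimal Python sketch of how raw click data could be turned into a heatmap grid. It is an illustration under stated assumptions, not a description of how any particular heatmap tool works: the function names `click_grid` and `temperature`, the grid size, and the hot/warm/cold thresholds are all hypothetical, and the click coordinates are made up.

```python
from collections import Counter


def click_grid(clicks, page_width, page_height, cols=4, rows=4):
    """Count clicks per grid cell.

    `clicks` is an iterable of (x, y) pixel coordinates. The page is divided
    into `rows` x `cols` equal cells (an assumed layout for this sketch).
    """
    counts = Counter()
    for x, y in clicks:
        # Skip clicks that fall outside the page area.
        if not (0 <= x < page_width and 0 <= y < page_height):
            continue
        col = int(x * cols / page_width)
        row = int(y * rows / page_height)
        counts[(row, col)] += 1
    return [[counts[(r, c)] for c in range(cols)] for r in range(rows)]


def temperature(count, max_count):
    """Label a cell relative to the busiest cell (thresholds are assumptions)."""
    if max_count == 0:
        return "cold"
    share = count / max_count
    if share >= 0.66:
        return "hot"
    if share >= 0.33:
        return "warm"
    return "cold"


# Made-up clicks on a hypothetical 1000 x 800 px page.
sample_clicks = [(10, 10), (20, 15), (30, 5), (900, 700)]
grid = click_grid(sample_clicks, 1000, 800, cols=2, rows=2)
busiest = max(max(row) for row in grid)
for row in grid:
    print([temperature(cell, busiest) for cell in row])
```

A cell that contains an important button but comes back labeled "cold" is the same signal as the cold blue button described above: the element is there, but visitors are not clicking it.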

They are especially useful when:

Despite their usefulness, heatmaps have boundaries:

Where heatmaps stop, A/B testing begins. A/B testing is all about experimentation. Instead of observing current behavior, it actively changes a variable and measures whether the new version performs better.

A/B testing allows businesses to make evidence-based decisions instead of relying on intuition or design trends. For example, while a designer may prefer a minimalist button, the test might show that a bold, contrasting button color drives significantly more conversions.
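"Performs better" needs a yardstick. One common way to judge an A/B result is a two-proportion z-test, sketched below in Python using only the standard library. This is one possible approach, not the method any specific testing platform uses; the function name `two_proportion_z_test` and the visitor and conversion counts are hypothetical.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare conversion rates of variant A (control) and variant B.

    Returns (rate_a, rate_b, z, p_value), where p_value is two-sided and
    based on the normal approximation, so it assumes reasonably large samples.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("No variation in the data; the test is undefined.")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a, rate_b, z, p_value


# Made-up numbers: 1,000 visitors per variant.
rate_a, rate_b, z, p_value = two_proportion_z_test(100, 1000, 130, 1000)
print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  z = {z:.2f}  p = {p_value:.3f}")
```

A small p-value (many teams use 0.05 as a conventional cutoff) suggests the difference between the two versions is unlikely to be random noise. The threshold and the minimum sample size are decisions to make before the test starts, not after looking at the results.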

Although both tools share the same goal of improving conversions, they differ in approach, output, and purpose.

Put simply: heatmaps are the detective; A/B testing is the courtroom trial. One gathers clues, the other proves the case.

Think of it this way: If you’re a doctor, heatmaps are the X-ray showing where the problem lies, and A/B testing is the treatment plan tested on patients. The two methods are complementary: diagnosis without treatment leaves the issue unresolved, while treatment without diagnosis risks targeting the wrong problem. By combining both, you ensure accuracy in identifying issues and confidence in applying the right fixes.

Instead of treating heatmaps and A/B testing as competing tools, smart businesses use them together. Each tool covers gaps left by the other: heatmaps identify what’s going wrong, and A/B testing confirms the right solution. This creates a closed loop of continuous improvement rather than relying on guesswork or incomplete data.

This cycle ensures that optimizations aren’t based on guesswork but on observed behavior followed by proven results. It encourages an ongoing culture of testing and learning, where each insight builds on the previous one to drive long-term growth. Over time, this systematic approach can transform small tweaks into significant, compounded gains.

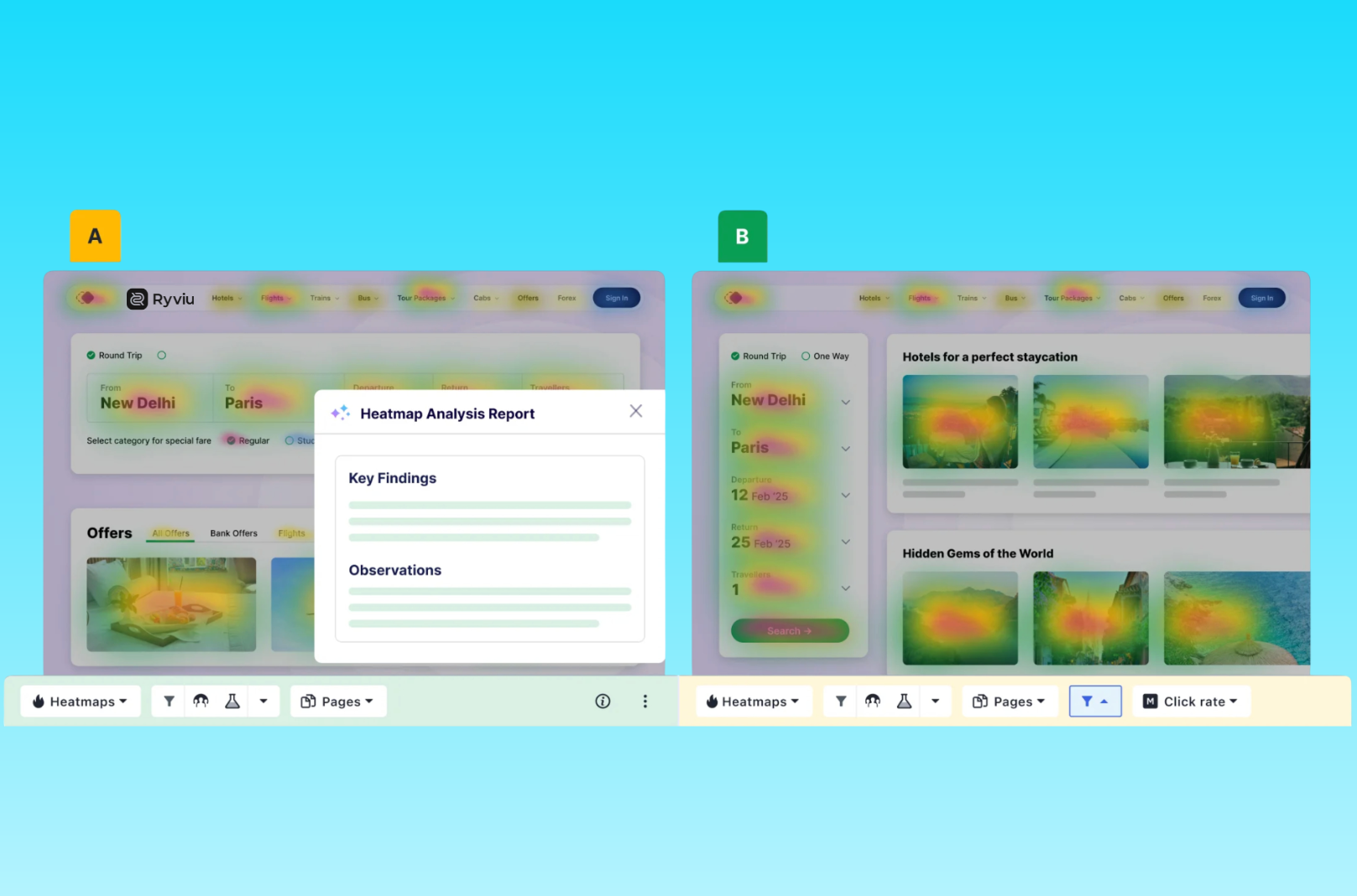

Instead of juggling multiple tools, some platforms offer both heatmaps and testing:

Using integrated tools streamlines workflows and ensures insights turn into action faster.

Heatmaps and A/B testing are two sides of the same conversion optimization coin. Heatmaps are powerful for diagnosing problems, while A/B testing validates which changes deliver measurable results.

When compared, it’s clear each has strengths and weaknesses. But when combined, they create a continuous loop of improvement: observe → hypothesize → test → refine.

In a competitive digital landscape, relying on guesswork is no longer enough. Businesses that use both heatmaps and A/B testing systematically can uncover hidden obstacles, validate their solutions, and steadily increase conversions over time. The smartest approach is to integrate both into your optimization toolkit.
